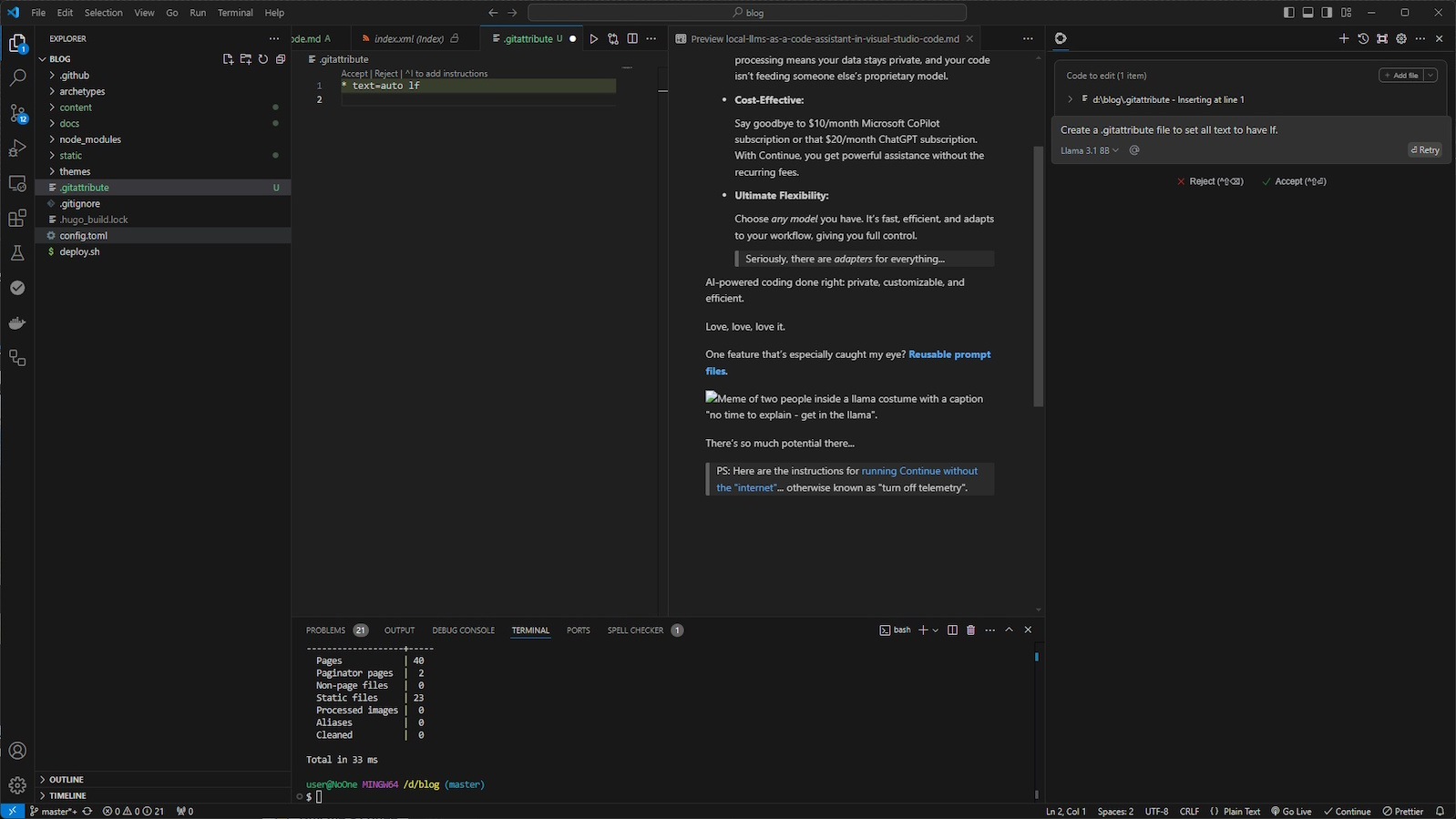

Local LLMs as a Code Assistant in Visual Studio Code!

I’ve started using a new Visual Studio Code extension called Continue, and it feels like having a private professional paired programming partner powered by local LLMs.

Heh, say that three times fast… I'll wait. :)

Why is it a big deal?

Runs Locally:

Continue works with platforms like Ollama and LM Studio, keeping everything on your device. No cloud processing means your data stays private, and your code isn’t feeding someone else’s proprietary model.
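Here is what "runs locally" looks like in practice. When a tool talks to Ollama, prompts go to a server on your own machine. The sketch below uses only Python's standard library to send a prompt to Ollama's `/api/generate` endpoint. It assumes Ollama is running on its default port (11434) and that a model named `llama3` is available; that name is a hypothetical choice, so use whatever you've pulled. This shows the local round trip only. It is not how Continue itself is implemented.

```python
import json
import urllib.request

# Assumption: Ollama is running locally on its default port (11434).
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(prompt: str, model: str = "llama3") -> urllib.request.Request:
    """Build a POST request for Ollama's /api/generate endpoint.

    The default model name is a hypothetical example; use any model you have pulled.
    """
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt: str, model: str = "llama3") -> str:
    """Send the prompt to the local Ollama server and return the generated text."""
    with urllib.request.urlopen(build_request(prompt, model)) as resp:
        body = json.loads(resp.read().decode("utf-8"))
    # With stream=False, Ollama replies with one JSON object whose
    # "response" field holds the full completion.
    return body["response"]
```

Calling `generate("Explain this regex: ^a+$")` returns the model's reply as a string, and the request never leaves `localhost`.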

Cost-Effective:

Say goodbye to that $10/month GitHub Copilot subscription or that $20/month ChatGPT subscription. With Continue, you get powerful assistance without the recurring fees.

Ultimate Flexibility:

Use any model you have installed locally, and swap models whenever you like. It adapts to your workflow and leaves you in full control.

Seriously, there are adapters for everything…

AI-powered coding done right: private, customizable, and efficient.

Love, love, love it.

One feature that’s especially caught my eye? Reusable prompt files.

There’s so much potential there…

PS: Here are the instructions for running Continue without the “internet”… otherwise known as “turn off telemetry”.

Just neat…
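Here is a rough sketch of the telemetry opt-out. It assumes Continue's JSON config lives at `~/.continue/config.json` and that it supports the `allowAnonymousTelemetry` setting. Newer releases may use a YAML config instead, so check the Continue docs for your version. The helper name `disable_telemetry` is hypothetical. It turns off that one setting and leaves everything else in the file untouched.

```python
import json
from pathlib import Path

# Assumption: Continue's JSON config lives at ~/.continue/config.json.
# Newer Continue releases may use config.yaml instead; check your install.
DEFAULT_CONFIG = Path.home() / ".continue" / "config.json"


def disable_telemetry(config_path: Path = DEFAULT_CONFIG) -> dict:
    """Hypothetical helper: set allowAnonymousTelemetry to false, keeping other settings."""
    config = {}
    if config_path.exists():
        # Preserve whatever models and options are already configured.
        config = json.loads(config_path.read_text(encoding="utf-8"))
    config["allowAnonymousTelemetry"] = False
    config_path.parent.mkdir(parents=True, exist_ok=True)
    config_path.write_text(json.dumps(config, indent=2), encoding="utf-8")
    return config
```

After running it, restart or reload VS Code so the extension picks up the change.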

Coderrob